The third term in Equation 7 leads to undesired second-order terms, such as fr + fh and 2fr, which fall within the vital signal spectra. If not accounted for, these terms lead to an incorrect estimate of the vital signal, which makes accurate estimation of vital parameters challenging. The other terms in Equation 7 lead to the expression

Considering that Rk>> , the second term in Equation 8 can be ignored. The first term is proportional to the human’s base distance Rk and is estimated or compensated by the fast-time FFT. The change in phase over slow time, given by the fourth term, represents the minute motion of the human chest wall. Thus, by monitoring the phase and then applying spectral analysis, one can estimate the non-overlapping heart and respiratory frequencies. However, the estimate of these minute movements is susceptible to random body movement and human motion. This poses another challenge to wide acceptance of the technology in applications where precise vital measurements are mandatory. Random body movement can be compensated for by using multiple radar sensors.14
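The phase-monitoring and spectral-analysis step can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the synthetic chest displacement, the 5 mm wavelength, the 20 Hz slow-time rate, the band limits and the function name `estimate_vital_rates` are not taken from the article, and the sketch ignores the second-order terms and random body movement discussed above.

```python
import numpy as np

def estimate_vital_rates(phase, fs, resp_band=(0.1, 0.5), heart_band=(0.8, 2.0)):
    """Return (respiration_hz, heartbeat_hz) from a wrapped slow-time phase signal.

    The band limits are assumed typical ranges, not values from the article.
    """
    phase = np.unwrap(phase)              # undo 2*pi wrapping of the measured phase
    phase = phase - np.mean(phase)        # remove the constant offset
    window = np.hanning(len(phase))       # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(phase * window))
    freqs = np.fft.rfftfreq(len(phase), d=1.0 / fs)

    def peak_in(band):
        # Strongest spectral line inside the given frequency band
        mask = (freqs >= band[0]) & (freqs <= band[1])
        return float(freqs[mask][np.argmax(spectrum[mask])])

    return peak_in(resp_band), peak_in(heart_band)

# Synthetic example (all values assumed): 60 s of slow-time samples at 20 Hz
fs = 20.0
t = np.arange(1200) / fs
wavelength = 5e-3                                  # roughly a 60 GHz carrier
chest = 4e-3 * np.sin(2 * np.pi * 0.25 * t)        # respiration, 0.25 Hz
chest += 0.3e-3 * np.sin(2 * np.pi * 1.2 * t)      # heartbeat, 1.2 Hz
true_phase = 4 * np.pi * chest / wavelength        # two-way path phase
measured = np.angle(np.exp(1j * true_phase))       # what the radar sees (wrapped)

resp_hz, heart_hz = estimate_vital_rates(measured, fs)
print(f"respiration ~ {resp_hz:.2f} Hz, heartbeat ~ {heart_hz:.2f} Hz")
```

In a real measurement the heartbeat line is much weaker than the respiration line and its harmonics, so simple peak picking like this is only a starting point.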

The ability of the radar to wirelessly estimate vital signs leads to applications such as sleep apnea detection, patient monitoring, presence sensing, driver monitoring and physiological monitoring in surveillance and earthquake rescue operations.15-19 Short-range radar systems that are lightweight and low-cost offer good solutions for human presence sensing with efficient energy utilization.20-21

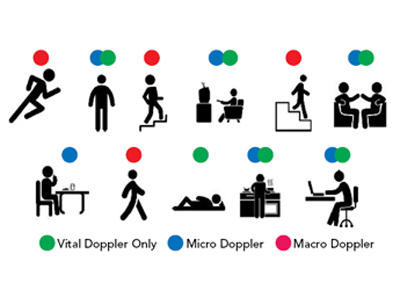

Figure 5 Human activities with associated Doppler sensed by the radar.

We can divide the Doppler components into three categories: macro Doppler induced by major body movement, micro Doppler induced by human gestures and vital Doppler induced by vital motion. Figure 5 illustrates various indoor human activities and their associated Doppler components that can be sensed by the radar. A radar sensor can identify these different Doppler components distinctly, offering a ubiquitous solution for occupancy sensing.21 Other sensors using passive infrared (PIR) or ultrasound, which detect presence from motion, fail during some daily activities such as sitting, reading or sleeping.

Classification Sensing

Radar can capture unique signatures of a target based on target velocity, size, shape, smoothness, reflectivity and orientation, as well as horizontal and vertical polarization properties. These properties can be extracted with appropriate feature engineering algorithms developed for classification and sensing.

Consider an example of classifying materials such as carpet, tile, laminate and water using a mmWave radar. Figure 6 shows various materials with their range/cross-range images generated through Capon-based MIMO imaging principles. One of the challenges to classification sensing for industrial and consumer applications is scalability, due to sensor-to-sensor variability from fabrication uncertainties such as wafer lots and manufacturing artifacts. Classification models trained on one group of sensors do not necessarily work for other groups of similar sensors. A change in the casing enclosing the radar can also influence the back-scattered signal, introducing variations in the classification features that result in classification errors. When the classification model is developed using certain material types and radar orientations, the model fails to scale to a different sensor orientation or to a variant of a material, such as one carpet made of nylon and another of olefin. It is impossible to train the sensor system to account for all these variations.

Figure 6 Materials and their range/cross-range features: carpet (a), tile (b), laminate (c) and water (d). Note differing scales.
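To give a feel for the Capon principle behind images such as those in Figure 6, the following Python sketch computes a one-dimensional Capon (minimum variance) angle spectrum for a uniform linear array. It is a simplified illustration, not the article's imaging chain: the 8-element half-wavelength array, the single simulated reflector at 20 degrees, the noise level, the diagonal loading factor and the function names are all assumptions.

```python
import numpy as np

def steering_vector(theta_deg, n_elements, spacing_wavelengths=0.5):
    """Array response of a uniform linear array toward angle theta_deg."""
    n = np.arange(n_elements)
    phase = 2j * np.pi * spacing_wavelengths * n * np.sin(np.deg2rad(theta_deg))
    return np.exp(phase)

def capon_spectrum(snapshots, scan_deg, loading=1e-3):
    """Capon power estimate P(theta) = 1 / (a^H R^-1 a) over the scan angles."""
    n_elements, n_snap = snapshots.shape
    cov = snapshots @ snapshots.conj().T / n_snap          # sample covariance
    # Diagonal loading (assumed value) keeps the inverse well conditioned
    cov = cov + loading * np.trace(cov).real / n_elements * np.eye(n_elements)
    cov_inv = np.linalg.inv(cov)
    power = np.empty(len(scan_deg))
    for i, theta in enumerate(scan_deg):
        a = steering_vector(theta, n_elements)
        power[i] = 1.0 / np.real(a.conj() @ cov_inv @ a)
    return power

# Simulated data (assumed scenario): one reflector at 20 degrees plus noise
rng = np.random.default_rng(0)
n_elements, n_snap, true_deg = 8, 200, 20.0
signal = rng.standard_normal(n_snap) + 1j * rng.standard_normal(n_snap)
noise = 0.1 * (rng.standard_normal((n_elements, n_snap))
               + 1j * rng.standard_normal((n_elements, n_snap)))
snapshots = np.outer(steering_vector(true_deg, n_elements), signal) + noise

scan = np.arange(-90.0, 90.5, 0.5)
power = capon_spectrum(snapshots, scan)
estimated_deg = float(scan[np.argmax(power)])
print(f"Capon peak at {estimated_deg:.1f} degrees")
```

A range/cross-range image would repeat this kind of angle estimate for each range bin, using the virtual array formed by the MIMO transmit and receive channels.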

One approach to circumventing these challenges is “one-shot learning,” inspired by the word2vec embedding concept from natural language processing and, more recently, applied to human face classification.22-24 Instead of training a deep neural network as a multi-class classifier, one-shot learning determines the similarity between two materials by projecting the input features into a d-dimensional embedding space. Typical values of d are 16 or 32 for use cases such as this.25 One-shot learning uses a Siamese network, which consists of two identical neural networks sharing the same weights and whose final-layer outputs are fed to a contrastive loss function. The network tries to place the anchor (current feature image) close to the positive (feature image that is, in theory, similar to the anchor) and as far as possible from the negative (feature image that is different from the anchor). One of the contrastive loss functions on which the Siamese network is trained is

where DSN is the Euclidean distance between outputs of the twin Siamese networks. Y = 1 if inputs are from the same class, and Y = 0 if they are from a different class. m > 0 is the margin hyper-parameter.
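A widely used contrastive loss that matches these definitions is L = Y · ½ · DSN² + (1 − Y) · ½ · max(0, m − DSN)². The Python sketch below implements that form with NumPy. The ½ scaling, the default margin of 1.0 and the function name `contrastive_loss` are assumptions for illustration; the article's exact expression may differ in its constants.

```python
import numpy as np

def contrastive_loss(emb_a, emb_b, same_class, margin=1.0):
    """Per-pair contrastive loss for two batches of embeddings.

    emb_a, emb_b : arrays of shape (n_pairs, d), outputs of the twin networks
    same_class   : Y, 1 if the pair is from the same class, 0 otherwise
    margin       : m > 0, distance beyond which dissimilar pairs cost nothing
    """
    emb_a = np.asarray(emb_a, dtype=float)
    emb_b = np.asarray(emb_b, dtype=float)
    y = np.asarray(same_class, dtype=float)
    d_sn = np.linalg.norm(emb_a - emb_b, axis=-1)       # Euclidean distance D_SN
    similar_term = y * 0.5 * d_sn ** 2                  # pulls same-class pairs together
    dissimilar_term = (1.0 - y) * 0.5 * np.maximum(0.0, margin - d_sn) ** 2
    return similar_term + dissimilar_term

# Three toy pairs in a 2-D embedding space, with distances 1.0, 0.5 and 5.0
a = np.zeros((3, 2))
b = np.array([[0.6, 0.8], [0.3, 0.4], [3.0, 4.0]])
labels = np.array([1, 0, 0])
losses = contrastive_loss(a, b, labels, margin=1.0)
print(losses)
```

The first pair is penalized for being far apart despite sharing a class, the second for being closer than the margin despite differing in class, and the third incurs no loss because it is already farther apart than the margin.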

If, for example, there are 100 images of each of the four classes, then the total number of possible trainable image pairs is N = C(400, 2) = (400 × 399)/2 = 79,800. The intuitive idea is that the deep network learns a d-dimensional space where similar materials are co-located and dissimilar materials are farther apart. During the inference phase, the input feature image from an unknown material is fed to the trained deep neural network, which projects the feature map into the d-dimensional embedding space, where it is then classified through a nearest neighbor algorithm.
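The pair count and the nearest-neighbor inference step can be checked with a short Python sketch. The pair arithmetic follows directly from the numbers above. The embeddings, however, are random stand-ins for the output of a trained network, and the class centers, noise level and helper name `nearest_neighbor_label` are assumptions for illustration.

```python
import math
import numpy as np

# Pair counting for 4 classes with 100 images each
images_per_class, n_classes = 100, 4
total_images = images_per_class * n_classes
total_pairs = math.comb(total_images, 2)                 # all unordered pairs
same_pairs = n_classes * math.comb(images_per_class, 2)  # pairs with Y = 1
diff_pairs = total_pairs - same_pairs                    # pairs with Y = 0
print(total_pairs, same_pairs, diff_pairs)

def nearest_neighbor_label(query, ref_embeddings, ref_labels):
    """Label of the reference embedding closest (Euclidean) to the query."""
    distances = np.linalg.norm(ref_embeddings - query, axis=1)
    return ref_labels[int(np.argmin(distances))]

# Stand-in embeddings (assumed): d = 16, one well-separated cluster per material
rng = np.random.default_rng(1)
d = 16
materials = ["carpet", "tile", "laminate", "water"]
centers = 5.0 * rng.standard_normal((len(materials), d))

ref_embeddings, ref_labels = [], []
for center, name in zip(centers, materials):
    for _ in range(5):                                   # 5 references per class
        ref_embeddings.append(center + 0.1 * rng.standard_normal(d))
        ref_labels.append(name)
ref_embeddings = np.array(ref_embeddings)

# Embedding of an "unknown" sample that actually lies near the tile cluster
query = centers[1] + 0.1 * rng.standard_normal(d)
predicted = nearest_neighbor_label(query, ref_embeddings, ref_labels)
print(predicted)
```

Because classification reduces to a distance lookup, a new material or sensor variant can in principle be supported by adding a few reference embeddings rather than retraining the network.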

Radar sensors such as Soli lead to emerging technologies such as gesture sensing.26-27 This technology is used in wearables and mobile phones to provide a natural and intuitive human-computer interface. Radar sensors such as RadarCat have proven to be extremely accurate in object and material classification of everyday objects and materials, transparent materials and different body parts.28 Recently, radar sensors have been successfully applied to fingerprint biometric identification. Unique radar signatures are fed through novel signal processing and machine learning models to identify a group of individuals with reliable accuracy.29

CONCLUSION

This article provides an overview of advancements in front-end and back-end technologies leading to the use of radar in consumer and industrial applications. Radar not only senses the 3D position of targets in its field of view, it also enables sensing of human vital signals or signal classification as the fourth dimension, providing a different view of its environment where other sensors fail. The radar sensor is able to extract subtle information about targets in its field of view, making it suitable for a variety of industrial and consumer applications.

References

- Z. Peng, J. M. Muñoz-Ferreras, Y. Tang, C. Liu, R. Gómez-García, L. Ran and C. Li, “A Portable FMCW Interferometry Radar with Programmable Low-IF Architecture for Localization, ISAR Imaging, and Vital Sign Tracking,” IEEE Transactions on Microwave Theory and Techniques, Vol. 65, No. 4, April 2017, pp. 1334–1344.

- A. Santra, R. V. Ulaganathan, T. Finke, A. Baheti, D. Noppeney, J. R. Wolfgang and S. Trotta, “Short-Range Multi-Mode Continuous-Wave Radar for Vital Sign Measurement and Imaging,” IEEE Radar Conference, April 2018.

- G. L. Charvat, “Small and Short-Range Radar Systems,” CRC Press, 2014.

- C. Li, Z. Peng, T. Y. Huang, T. Fan, F. K. Wang, T. S. Horng, J. M. Muñoz-Ferreras, R. Gómez-García, L. Ran and J. Lin, “A Review on Recent Progress of Portable Short-Range Noncontact Microwave Radar Systems,” IEEE Transactions on Microwave Theory and Techniques, Vol. 65, No. 5, May 2017, pp. 1692–1706.

- Z. Peng and C. Li, “A 24 GHz Portable FMCW Radar with Continuous Beam Steering Phased Array,” Radar Sensor Technology XXI, June 2017.

- P. Hillger, R. Jain, J. Grzyb, L. Mavarani, B. Heinemann, G. M. Grogan, P. Mounaix, T. Zimmer and U. Pfeiffer, “A 128-Pixel 0.56 THz Sensing Array for Real-Time Near-Field Imaging in 0.13 µm SiGe BiCMOS,” IEEE International Solid-State Circuits Conference, February 2018, pp. 418–420.

- TSMC, www.tsmc.com/english/dedicatedFoundry/technology/logic.htm

- T. Fujibayashi, Y. Takeda, W. Wang, Y. S. Yeh, W. Stapelbroek, S. Takeuchi and B. Floyd, “A 76 to 81 GHz Multi-Channel Radar Transceiver,” IEEE Journal of Solid-State Circuits, Vol. 52, No. 9, September 2017, pp. 2226–2241.

- I. Nasr, R. Jungmaier, A. Baheti, D. Noppeney, J. S. Bal, M. Wojnowski, E. Karagozler, H. Raja, J. Lien, I. Poupyrev and S. Trotta, “A Highly Integrated 60 GHz 6-Channel Transceiver with Antenna in Package for Smart Sensing and Short-Range Communications,” IEEE Journal of Solid-State Circuits, Vol. 51, No. 9, September 2016, pp. 2066–2076.

- J. Böck, K. Aufinger, S. Boguth, C. Dahl, H. Knapp, W. Liebl, D. Manger, T. F. Meister, A. Pribil, J. Wursthorn, R. Lachner, B. Heinemann, H. Rücker, A. Fox, R. Barth, G. Fischer, S. Marschmeyer, D. Schmidt, A. Trusch and C. Wipf, “SiGe HBT and BiCMOS Process Integration Optimization Within the DOTSEVEN Project,” IEEE Bipolar/BiCMOS Circuits and Technology Meeting, October 2015, pp. 121–124.

- S. Shopov, M. G. Girma, J. Hasch, N. Cahoon and S. P. Voinigescu, “Ultra-Low Power Radar Sensors for Ambient Sensing in the V-Band,” IEEE Transactions on Microwave Theory and Techniques, Vol. 65, No. 12, December 2017, pp. 5401–5410.

- A. Hagelauer, M. Wojnowski, K. Pressel, R. Weigel and D. Kissinger, “Integrated Systems-in-Package: Heterogeneous Integration of Millimeter Wave Active Circuits and Passives in Fan-Out Wafer-Level Packaging Technologies,” IEEE Microwave Magazine, Vol. 19, No. 1, January 2018, pp. 48–56.

- H. J. Ng, W. Ahmad, M. Kucharski, J. H. Lu and D. Kissinger, “Highly-Miniaturized 2-Channel mmWave Radar Sensor with On-Chip Folded Dipole Antennas,” IEEE RFIC Symposium, June 2017, pp. 368–371.

- C. Li and J. Lin, “Random Body Movement Cancellation in Doppler Radar Vital Sign Detection,” IEEE Transactions on Microwave Theory and Techniques, Vol. 56, No. 12, December 2008, pp. 3143–3152.

- C. Li, V. M. Lubecke, O. Boric-Lubecke and J. Lin, “A Review on Recent Advances in Doppler Radar Sensors for Noncontact Healthcare Monitoring,” IEEE Transactions on Microwave Theory and Techniques, Vol. 61, No. 5, May 2013, pp. 2046–2060.

- C. Gu, “Short-Range Noncontact Sensors for Healthcare and Other Emerging Applications: A Review,” Sensors, Vol. 16, No. 8, July 2016.

- C. Gu and C. Li, “From Tumor Targeting to Speech Monitoring: Accurate Respiratory Monitoring Using Medical Continuous-Wave Radar Sensors,” IEEE Microwave Magazine, Vol. 15, No. 4, June 2014, pp. 66–76.

- K. van Loon, M. J. M. Breteler, L. van Wolfwinkel, A. T. Rheineck Leyssius, S. Kossen, C. J. Kalkman, B. van Zaane and L. M. Peelen, “Wireless Non-Invasive Continuous Respiratory Monitoring with FMCW Radar: a Clinical Validation Study,” Journal of Clinical Monitoring and Computing, Vol. 30, No. 6, December 2016, pp. 797–805.

- C. Li, J. Lin and Y. Xiao, “Robust Overnight Monitoring of Human Vital Signs by a Non-Contact Respiration and Heartbeat Detector,” International Conference of the Engineering in Medicine and Biology Society, August 2006, pp. 2235–2238.

- E. Yavari, C. Song, V. Lubecke and O. Boric-Lubecke, “Is There Anybody in There?: Intelligent Radar Occupancy Sensors,” IEEE Microwave Magazine, Vol. 15, No. 2, March-April 2014, pp. 57–64.

- A. Santra, R. V. Ulaganathan and T. Finke, “Short-Range Millimetric-Wave Radar System for Occupancy Sensing Application,” IEEE Sensors Letters, Vol. 2, No. 3, September 2018.

- Y. Taigman, M. Yang, M. A. Ranzato and L. Wolf, “Deepface: Closing the Gap to Human-Level Performance in Face Verification,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, September 2014, pp. 1701–1708.

- F. Schroff, D. Kalenichenko and J. Philbin, “Facenet: A Unified Embedding for Face Recognition and Clustering,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, June 2015, pp. 815–823.

- G. Koch, R. Zemel and R. Salakhutdinov, “Siamese Neural Networks for One-Shot Image Recognition,” ICML Deep Learning Workshop, Vol. 2, 2015.

- J. Weiß and A. Santra, “One-Shot Learning for Robust Material Classification Using Millimeter-Wave Radar System,” IEEE Sensors Letters, Vol. 2, No. 4, 2018.

- J. Lien, N. Gillian, M. E. Karagozler, P. Amihood, C. Schwesig, E. Olson, H. Raja and I. Poupyrev, “Soli: Ubiquitous Gesture Sensing with Millimeter Wave Radar,” ACM Transactions on Graphics, Vol. 35, No. 4, July 2016.

- S. Wang, J. Song, J. Lien, I. Poupyrev and O. Hilliges, “Interacting with Soli: Exploring Fine-Grained Dynamic Gesture Recognition in the RF Spectrum,” Proceedings of the 29th Annual Symposium on User Interface Software and Technology, October 2016, pp. 851–860.

- H. S. Yeo, G. Flamich, P. Schrempf, D. Harris-Birtill and A. Quigley, “Radarcat: Radar Categorization for Input and Interaction,” Proceedings of the 29th Annual Symposium on User Interface Software and Technology, October 2016, pp. 833–841.

- K. Diederichs, A. Qiu and G. Shaker, “Wireless Biometric Individual Identification Utilizing Millimeter Waves,” IEEE Sensors Letters, Vol. 1, No. 1, February 2017, pp. 1–4.