In an era where wireless connectivity shapes our daily lives and demand for spectrum efficiency keeps growing, understanding and managing signal interference is paramount. This is especially critical where 5G and RADAR signals compete for spectrum, because interference between them can significantly degrade wireless system performance. For example, a 5G cell tower near a runway may affect aircraft landings, because 5G signals sit close in frequency to the signals used by RADAR altimeters.

Accurately classifying signals is essential for assessing spectrum occupancy and potential interference between different signals. For RF and microwave engineers, one approach combines semantic segmentation networks and deep learning to classify signals in a wideband spectrum captured with a software-defined radio (SDR). Applying it starts with understanding the workflow:

- Collect signals for training, validation, and testing.

- Train and validate the deep learning network.

- Test with signals across a wide spectrum.

- Identify and fix issues to arrive at a system that captures a wider spectrum for signal classification.
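The first step, splitting the collected frames into training, validation, and test sets, can be sketched in Python as follows. The 80/10/10 fractions, the fixed seed, and the function name `stratified_split` are assumptions for illustration; the article does not say how its data was split. Integers stand in for spectrogram frames, and the dataset size matches the 1,000 frames per category described in the article.

```python
import random

def stratified_split(frames_by_class, train_frac=0.8, val_frac=0.1, seed=0):
    """Split labeled frames into train/validation/test sets, category by category.

    frames_by_class maps a category name to its frames. The fractions and the
    seed are illustrative assumptions, not values from the article.
    """
    rng = random.Random(seed)  # fixed seed -> reproducible split
    train, val, test = [], [], []
    for label in sorted(frames_by_class):
        frames = list(frames_by_class[label])
        rng.shuffle(frames)
        n_train = round(len(frames) * train_frac)
        n_val = round(len(frames) * val_frac)
        # Splitting each category separately keeps the class balance in every set.
        train += [(f, label) for f in frames[:n_train]]
        val += [(f, label) for f in frames[n_train:n_train + n_val]]
        test += [(f, label) for f in frames[n_train + n_val:]]
    return train, val, test

# Integers are placeholders for frames: NR only, RADAR only, and hybrid.
frames = {"NR": range(1000), "RADAR": range(1000, 2000), "NR+RADAR": range(2000, 3000)}
train, val, test = stratified_split(frames)
print(len(train), len(val), len(test))  # 2400 300 300
```

Splitting per category keeps NR-only, RADAR-only, and hybrid frames equally represented in every set, so validation and test scores are not skewed by class imbalance.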

Figure 1 shows the workflow for using deep learning in signal classification.

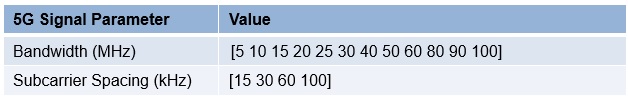

Table 1: Variability in 5G signal

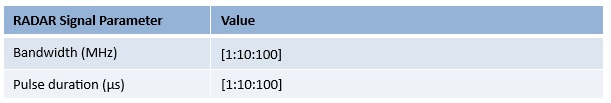

Table 2: Variability in RADAR signal. ©2024 The MathWorks, Inc

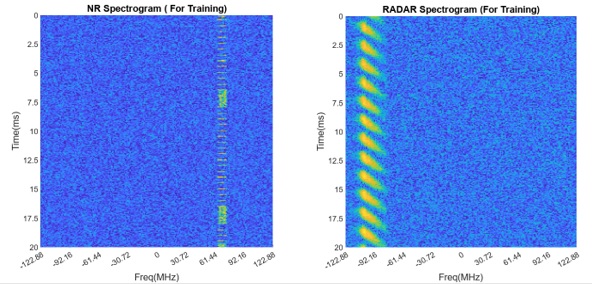

To build the training set, 1,000 frames were generated for each of three signal categories: 5G NR (New Radio) only, RADAR only, and a combination of 5G NR and RADAR. The spectrograms were generated at a sample rate of 245.76 MSPS. Together, these frames form a diverse dataset that mirrors real-world signal scenarios, which improves the robustness and accuracy of the classification model. Figure 3 shows spectrograms of 5G and RADAR signals along with their ground-truth labels. These labels are essential to the supervised learning approach, because they are what teaches the network to distinguish between the signal types.

Figure 3: 5G and RADAR spectrograms used for training. ©2024 The MathWorks, Inc
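The ground-truth labels in Figure 3 assign a class to every time-frequency pixel of a spectrogram. The Python sketch below shows one way to build such a mask for a single frame. The 256-point FFT, the class IDs, and the emission tuple format are assumptions for illustration, not the article's code. It also shows the frequency-resolution arithmetic: at 245.76 MSPS, a 256-point FFT gives 245.76e6 / 256 = 960 kHz per bin.

```python
SAMPLE_RATE_HZ = 245.76e6  # sample rate stated in the article
N_FFT = 256                # assumption: 256-point FFT -> 256 frequency bins
LABELS = {"noise": 0, "NR": 1, "RADAR": 2}  # hypothetical class IDs

def freq_to_bin(freq_hz, fs=SAMPLE_RATE_HZ, n_fft=N_FFT):
    """Map a baseband frequency in [-fs/2, fs/2) to a centered (fftshift-ed) bin index."""
    bin_width = fs / n_fft  # 960 kHz per bin with the defaults
    idx = int((freq_hz + fs / 2) // bin_width)
    return min(max(idx, 0), n_fft - 1)  # clamp to the valid bin range

def label_mask(n_time, emissions, fs=SAMPLE_RATE_HZ, n_fft=N_FFT):
    """Build an n_fft x n_time ground-truth mask, initialized to noise.

    Each emission is (label, f_low_hz, f_high_hz, t_start, t_stop), with time in
    spectrogram columns and t_stop exclusive. Later emissions overwrite earlier
    ones where they overlap.
    """
    mask = [[LABELS["noise"]] * n_time for _ in range(n_fft)]
    for label, f_lo, f_hi, t0, t1 in emissions:
        for b in range(freq_to_bin(f_lo, fs, n_fft), freq_to_bin(f_hi, fs, n_fft) + 1):
            for t in range(max(t0, 0), min(t1, n_time)):
                mask[b][t] = LABELS[label]
    return mask

# Hybrid frame: a 100 MHz-wide NR carrier for the whole frame plus a short RADAR burst.
mask = label_mask(32, [("NR", -50e6, 50e6, 0, 32), ("RADAR", 80e6, 90e6, 10, 20)])
print(sum(row.count(1) for row in mask), sum(row.count(2) for row in mask))  # 3392 110
```

The NR carrier covers bins 75 through 180 (106 bins across 32 columns), and the RADAR burst covers bins 211 through 221 for 10 columns.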

MATLAB supports several ways to develop deep learning networks for signal classification, from coding a network from scratch to building one interactively in the Deep Network Designer app. Transfer learning, which imports a pretrained network into Deep Learning Toolbox, can significantly speed up development. It reuses what a pretrained network has already learned (in this application, ResNet-50) to recognize new patterns in a new dataset. Compared with training from scratch, transfer learning shortens the development cycle and simplifies training.
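As a conceptual illustration only, the sketch below shows the feature-extraction form of transfer learning: a fixed feature extractor is reused unchanged, and only a newly added classification head is trained. The tiny `frozen_backbone` function is a hypothetical stand-in for a pretrained network such as ResNet-50, and the toy data is invented; this is plain Python, not the MATLAB Deep Learning Toolbox workflow used in the article.

```python
import math
import random

def frozen_backbone(x):
    """Hypothetical stand-in for a pretrained feature extractor.

    It is a fixed function: nothing in it is updated during training.
    """
    return [x[0] + x[1], x[0] - x[1], 1.0]  # constant 1.0 acts as a bias feature

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def train_new_head(data, n_classes, lr=0.1, epochs=50, seed=0):
    """Train only a new linear + softmax head with per-sample SGD."""
    rng = random.Random(seed)
    n_feat = len(frozen_backbone(data[0][0]))
    W = [[rng.uniform(-0.01, 0.01) for _ in range(n_feat)] for _ in range(n_classes)]
    epoch_losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            f = frozen_backbone(x)  # features come from the frozen part
            p = softmax([sum(w * v for w, v in zip(row, f)) for row in W])
            total -= math.log(p[y])  # cross-entropy loss
            for c in range(n_classes):
                g = p[c] - (1.0 if c == y else 0.0)  # dLoss/dLogit_c
                for j in range(n_feat):
                    W[c][j] -= lr * g * f[j]  # only head weights change
        epoch_losses.append(total / len(data))
    return W, epoch_losses

# Toy, linearly separable data with three classes (invented for illustration).
data = [([1, 0], 0), ([2, 0.5], 0), ([0, 1], 1), ([0.5, 2], 1), ([-1, -1], 2), ([-2, -1.5], 2)]
W, losses = train_new_head(data, n_classes=3)
print(losses[-1] < losses[0])  # True: the new head learned on top of fixed features
```

Fine-tuning, where some pretrained layers are also updated at a small learning rate, is a common variant; the sketch keeps the backbone entirely fixed for clarity.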

Once the network architecture is defined, specify the training parameters. These typically include the learning rate, number of epochs, mini-batch size, and optimization algorithm. Together they guide the training process and determine how effectively the network learns from the training data.
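The parameters listed above can be collected in one place, which also makes the resulting training length easy to compute. The Python sketch below uses hypothetical values, since the article does not publish its settings; field names loosely mirror MATLAB `trainingOptions` arguments such as `InitialLearnRate` and `MiniBatchSize`, but this is plain Python, not that API. The 2,400 training frames assume an 80% share of the 3,000 generated frames.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical hyperparameters; not the values used in the article."""
    optimizer: str = "sgdm"           # SGD with momentum
    momentum: float = 0.9
    initial_learn_rate: float = 0.02
    learn_rate_drop_factor: float = 0.1
    learn_rate_drop_period: int = 10  # epochs between learning-rate drops
    max_epochs: int = 20
    mini_batch_size: int = 40

def iterations_per_epoch(n_train, cfg):
    """Mini-batches needed to see every training frame once, counting a final partial batch."""
    return math.ceil(n_train / cfg.mini_batch_size)

def learn_rate_at(epoch, cfg):
    """Piecewise-constant schedule: scale by the drop factor every drop period (0-based epoch)."""
    steps = epoch // cfg.learn_rate_drop_period
    return cfg.initial_learn_rate * cfg.learn_rate_drop_factor ** steps

cfg = TrainingConfig()
n_iter = iterations_per_epoch(2400, cfg)
print(n_iter, n_iter * cfg.max_epochs)  # 60 1200
```

Dropping the learning rate partway through lets the optimizer take large steps early and settle more precisely later.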

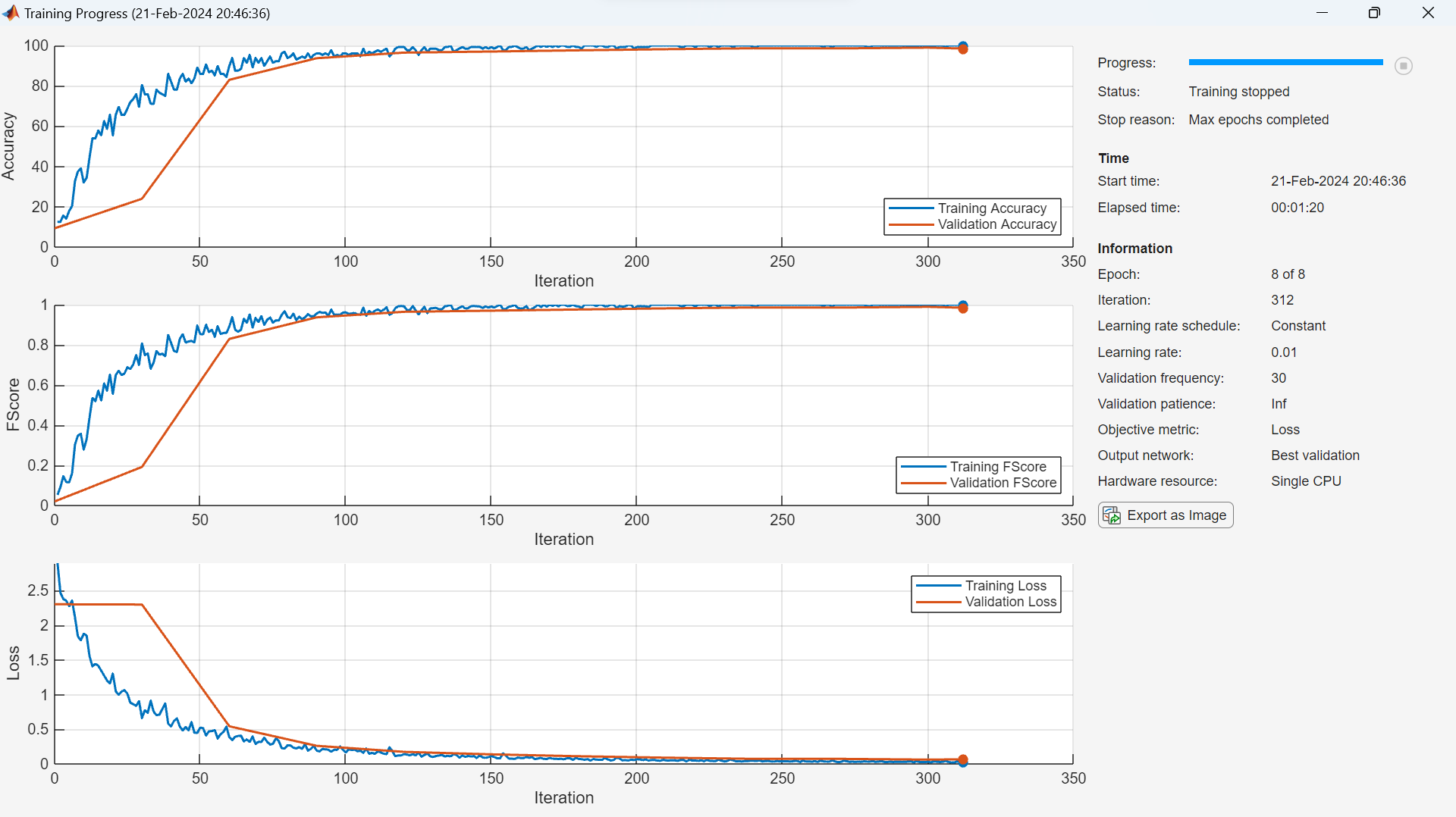

The network is then trained on the data. Once trained, it predicts class labels or numeric responses from new input data. Training can run on CPUs, GPUs, or multiple processors across clusters or cloud platforms, depending on the resources available. Figure 4 shows training in progress for the signal classification application.

Figure 4: Training of a deep learning model with configuration parameters. ©2024 The MathWorks, Inc
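For a semantic segmentation network, predicting class labels means assigning a class to every pixel of the spectrogram. A minimal sketch, assuming the network outputs one score map per class; the `scores[c][f][t]` layout, the function name, and the example scores are hypothetical:

```python
def predict_labels(scores):
    """Convert per-class scores into a label map.

    scores[c][f][t] is the score (for example, a softmax probability) for
    class c at frequency bin f and time column t. Each pixel gets the class
    with the highest score.
    """
    n_classes = len(scores)
    n_freq, n_time = len(scores[0]), len(scores[0][0])
    return [
        [max(range(n_classes), key=lambda c: scores[c][f][t]) for t in range(n_time)]
        for f in range(n_freq)
    ]

# Three classes (0 = noise, 1 = NR, 2 = RADAR) on a tiny 2 x 2 spectrogram.
scores = [
    [[0.7, 0.1], [0.2, 0.3]],  # class 0: noise
    [[0.2, 0.8], [0.1, 0.3]],  # class 1: NR
    [[0.1, 0.1], [0.7, 0.4]],  # class 2: RADAR
]
print(predict_labels(scores))  # [[0, 1], [2, 2]]
```

Ties go to the lower class index, because `max` returns the first maximal element.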

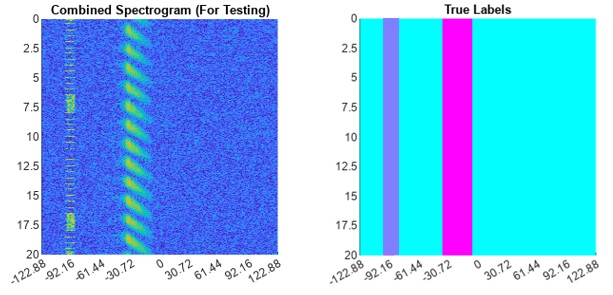

Figure 5: Spectrogram of test signals and its ground truth. ©2024 The MathWorks, Inc

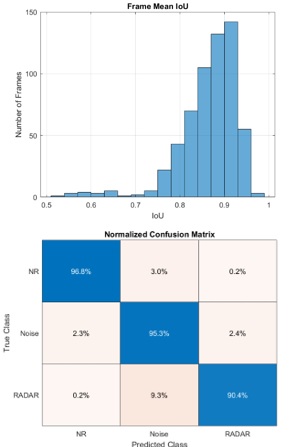

Evaluating the network produces performance metrics such as the confusion matrix and mean intersection over union (IoU), as shown in Figure 6.

Figure 6: Intersection over union and confusion matrix for the trained network. ©2024 The MathWorks, Inc
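Metrics like those in Figure 6 can be computed from a ground-truth mask and a predicted mask. The Python sketch below uses one common definition, IoU = TP / (TP + FP + FN) per class, averaged over the classes that appear; the function names and the small example masks are hypothetical, and MATLAB's evaluation tools may aggregate across images differently.

```python
import math

def confusion_matrix(true_mask, pred_mask, n_classes):
    """cm[i][j] counts pixels whose true class is i and predicted class is j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for true_row, pred_row in zip(true_mask, pred_mask):
        for t, p in zip(true_row, pred_row):
            cm[t][p] += 1
    return cm

def class_iou(cm):
    """IoU per class = TP / (TP + FP + FN); NaN if the class appears in neither mask."""
    ious = []
    for c in range(len(cm)):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                 # true c, predicted something else
        fp = sum(row[c] for row in cm) - tp  # predicted c, truly something else
        denom = tp + fp + fn
        ious.append(tp / denom if denom else float("nan"))
    return ious

def mean_iou(cm):
    """Average IoU over the classes that appear in either mask."""
    vals = [v for v in class_iou(cm) if not math.isnan(v)]
    return sum(vals) / len(vals)

true_mask = [[0, 0, 1, 1], [0, 2, 2, 1]]  # 0 = noise, 1 = NR, 2 = RADAR
pred_mask = [[0, 1, 1, 1], [0, 2, 0, 1]]
cm = confusion_matrix(true_mask, pred_mask, 3)
print(cm)                       # [[2, 1, 0], [0, 3, 0], [1, 0, 1]]
print(class_iou(cm))            # [0.5, 0.75, 0.5]
print(round(mean_iou(cm), 4))   # 0.5833
```

IoU penalizes both missed pixels and false detections, which is why it is preferred over plain pixel accuracy when the noise class dominates the spectrogram.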

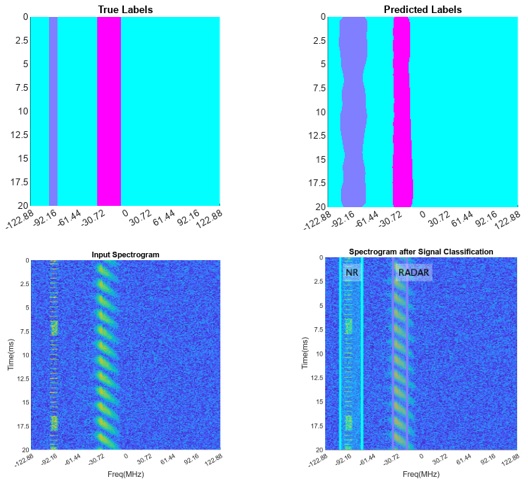

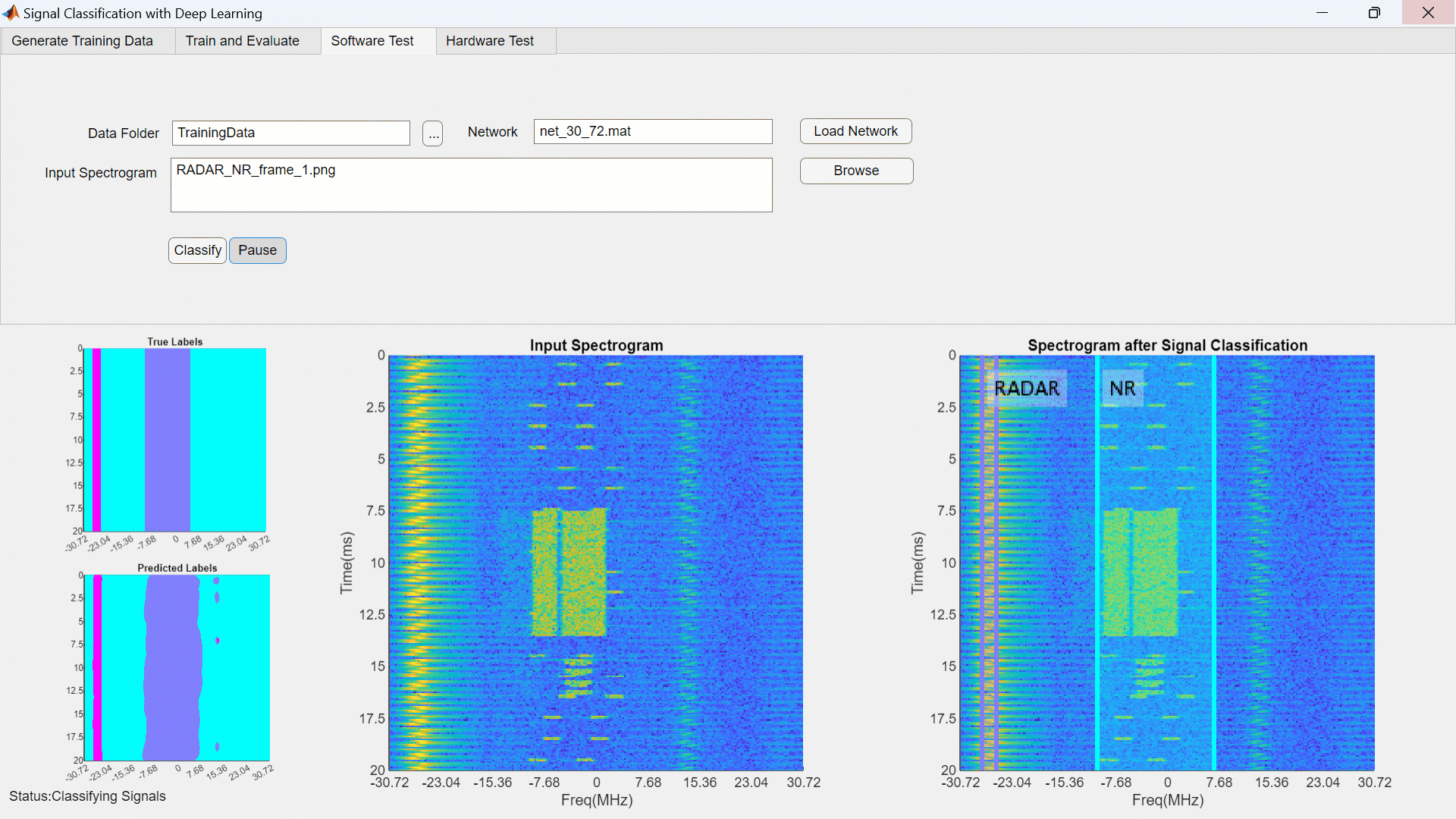

Figure 7 shows the trained deep learning network classifying an input spectrogram, identifying where in time and frequency the signal of interest is present.

Figure 7: Classifying input spectrogram for the signal of interest using trained network. ©2024 The MathWorks, Inc

Figure 8: User interface showing signal classification in action. ©2024 The MathWorks, Inc
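To report where in time and frequency a signal of interest appears, as in Figure 7, a predicted label map can be converted back to physical units. The Python sketch below assumes a centered spectrogram with 256 frequency bins and a hop of 256 samples between columns (both hypothetical), together with the 245.76 MSPS sample rate from the article; the function name is also hypothetical.

```python
SAMPLE_RATE_HZ = 245.76e6  # sample rate stated in the article
N_FFT = 256                # assumption: number of frequency bins
HOP = 256                  # assumption: samples between spectrogram columns

def occupancy_extent(mask, label, fs=SAMPLE_RATE_HZ, n_fft=N_FFT, hop=HOP):
    """Bounding box, in Hz and seconds, of all pixels predicted as `label`.

    Returns (f_low_hz, f_high_hz, t_start_s, t_stop_s), or None if the label
    is absent. Rows of `mask` are centered (fftshift-ed) frequency bins and
    columns are time.
    """
    cells = [(f, t) for f, row in enumerate(mask) for t, v in enumerate(row) if v == label]
    if not cells:
        return None
    bins = [f for f, _ in cells]
    cols = [t for _, t in cells]
    bin_width = fs / n_fft
    f_low = -fs / 2 + min(bins) * bin_width         # lower edge of the lowest bin
    f_high = -fs / 2 + (max(bins) + 1) * bin_width  # upper edge of the highest bin
    t_start = min(cols) * hop / fs
    t_stop = (max(cols) + 1) * hop / fs
    return f_low, f_high, t_start, t_stop

# Hypothetical prediction: RADAR (label 2) in bins 128-129, columns 1-2.
mask = [[0] * 4 for _ in range(N_FFT)]
for f in (128, 129):
    for t in (1, 2):
        mask[f][t] = 2
print(occupancy_extent(mask, 2))
```

Here the RADAR pixels span 0 Hz to 1.92 MHz. A single bounding box merges separate emissions of the same class; separating them would require connected-component labeling of the mask first.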

Using deep learning to classify signals across a wide spectrum is an effective way to identify signals of interest precisely in both time and frequency. Because it requires no demodulation or decoding, the approach is well suited to real-time spectrum monitoring, electronic warfare (EW), and signals intelligence (SIGINT) applications.

To learn more about the topics covered in this Code & Waves blog and explore such designs, see the examples below or email abhishet@mathworks.com for more information.

- Spectrum Sensing with Deep Learning (Example code): Learn how to train a semantic segmentation network using deep learning for spectrum monitoring.

- Wireless Waveform Generator (Example code): Learn how to create, impair, visualize, and export modulated waveforms.

- Capture and Label NR and LTE Signals for AI Training (Example code): Learn how to scan, capture, and label bandwidths with 5G NR and LTE signals.

- Radar Toolbox (Website page): Design and test probabilistic and physics-based radar sensor models.