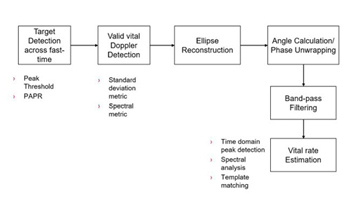

Figure 5 Processing pipeline for vital sign extraction and rate estimation using FMCW radar.

1. Spectral estimation techniques compute the FFT of the filtered displacement signal. The strongest peaks in the FFT spectrum within the heart-rate and breathing-rate frequency bands provide estimates of the heart rate and breathing rate, respectively. Figure 3 illustrates vital-rate estimation using this spectral analysis approach.

2. Counting the peaks in the filtered time-domain displacement signal over a window also provides an estimate of the breathing and heart rates. Figure 4 shows a vital sign frequency estimated by peak counting on filtered time-domain data; red triangles indicate the peaks detected in a window of the heart signal.
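The two estimators above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation, and it rests on several assumptions that are not in the article. The displacement signal is taken to be already extracted at an assumed slow-time rate `fs` of 20 Hz. The breathing band (0.1 to 0.5 Hz) and heart band (0.8 to 2.0 Hz) are typical ranges chosen for illustration. An ideal DFT-mask band-pass stands in for a real FIR/IIR filter, and a direct DFT replaces the FFT so the code stays dependency-free.

```python
import cmath
import math


def dft_bin(x, k):
    """Single DFT coefficient X[k] of the real sequence x (direct sum, no FFT)."""
    n = len(x)
    return sum(v * cmath.exp(-2j * math.pi * k * m / n) for m, v in enumerate(x))


def band_bins(n, fs, f_lo, f_hi):
    """DFT bins (excluding DC and Nyquist) whose frequency k*fs/n lies in [f_lo, f_hi]."""
    return [k for k in range(1, n // 2) if f_lo <= k * fs / n <= f_hi]


def spectral_rate_bpm(x, fs, f_lo, f_hi):
    """Method 1: frequency of the strongest in-band spectral peak, per minute."""
    n = len(x)
    k_peak = max(band_bins(n, fs, f_lo, f_hi), key=lambda k: abs(dft_bin(x, k)))
    return 60.0 * k_peak * fs / n


def bandpass(x, fs, f_lo, f_hi):
    """Ideal (brick-wall) band-pass: keep only in-band bins and resynthesise a real signal."""
    n = len(x)
    coeffs = {k: dft_bin(x, k) for k in band_bins(n, fs, f_lo, f_hi)}
    # factor 2/n restores the energy of the mirrored negative-frequency bins
    return [
        (2.0 / n) * sum((c * cmath.exp(2j * math.pi * k * m / n)).real for k, c in coeffs.items())
        for m in range(n)
    ]


def peak_count_rate_bpm(y, fs):
    """Method 2: count positive local maxima of the filtered signal over the window."""
    peaks = sum(1 for i in range(1, len(y) - 1) if y[i] > 0 and y[i - 1] < y[i] >= y[i + 1])
    return 60.0 * peaks / (len(y) / fs)


if __name__ == "__main__":
    fs = 20.0  # assumed slow-time sampling rate in Hz (32 s window below)
    disp = [math.sin(2 * math.pi * 0.25 * m / fs) + 0.1 * math.sin(2 * math.pi * 1.25 * m / fs)
            for m in range(640)]
    print(spectral_rate_bpm(disp, fs, 0.1, 0.5))  # about 15 breaths/min
    print(peak_count_rate_bpm(bandpass(disp, fs, 0.8, 2.0), fs))  # about 75 beats/min
```

Peak counting resolves the rate only to one peak per window. Over a 32 s window, one extra or missing peak shifts the estimate by about 1.9 per minute. The spectral method instead is limited by the bin spacing `fs / n`.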

Figure 5 summarizes the overall processing pipeline used to extract vital sign signals and estimate their rates with state-of-the-art signal processing.

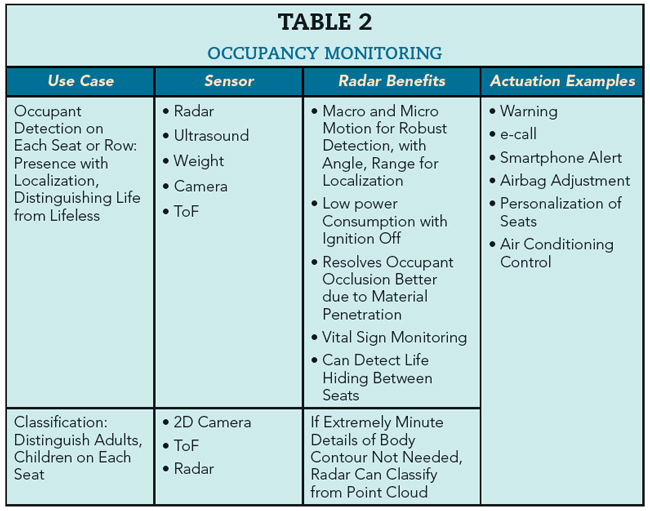

Occupancy Monitoring Systems

Occupancy monitoring is a relatively new concept compared to driver monitoring. As outlined in Table 2, occupancy information can be used to switch on seat heating, trigger seat belt reminder alarms, enable smart airbag deployment, issue warnings when a living being is left behind, and control automated air conditioning. Occupancy monitoring systems may need to remain active for some time after the ignition is switched off, so overall system power consumption can be an important design consideration.

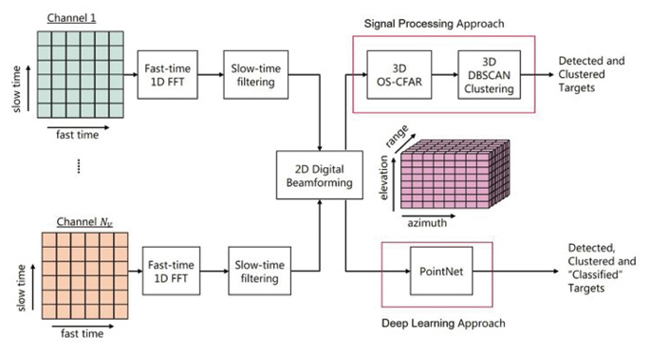

Using radar to detect the presence of children and localize them is challenging. Robust detection requires multiple detections per object, but radar point clouds are sparse compared with time-of-flight or camera data. Figure 6 shows the 3D radar point detection and classification processing pipeline for rear occupant analysis. Range processing is the first step: a 1D FFT transforms the fast-time data into range bins. A window function is applied to the fast-time data, which is then optionally zero-padded before the FFT:

$$R_{in} = \sum_{l=0}^{N_s-1} w(l)\, r_i(l)\, e^{-j 2\pi n l/(N_s+Z)}$$

where Z is the number of fast-time zero-padding samples and N_s is the number of analog-to-digital converter samples along fast time. r_i(l) is the lth sample value of the ith chirp, w(l) is the window function, and R_{in} denotes the range spectrum value at the ith chirp and nth range bin.
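The windowed, zero-padded 1D range FFT described above can be sketched directly from its definition. This is a minimal illustration under assumptions not stated in the article. The Hann window is an assumed choice, and a direct DFT replaces the FFT to keep the code self-contained. The frame layout (one list of `N_s` real ADC samples per chirp) is hypothetical.

```python
import cmath
import math


def hann(n_samples):
    """Symmetric Hann window (an assumed choice; the article does not name a window)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * l / (n_samples - 1)) for l in range(n_samples)]


def range_profile(chirp, z):
    """R_i[n] for one chirp: windowed DFT of length N_s + Z over the N_s ADC samples."""
    n_s = len(chirp)
    w = hann(n_s)
    n_fft = n_s + z
    # the Z zero-padded samples contribute nothing, so the sum runs over l < N_s only
    return [
        sum(w[l] * chirp[l] * cmath.exp(-2j * math.pi * n * l / n_fft) for l in range(n_s))
        for n in range(n_fft)
    ]


def range_fft(frame, z=0):
    """Range spectra for every chirp i of a frame (rows = chirps, columns = fast time)."""
    return [range_profile(chirp, z) for chirp in frame]


if __name__ == "__main__":
    # hypothetical beat tone: 20 cycles in 64 samples, with a small chirp-to-chirp phase drift
    frame = [[math.cos(2 * math.pi * 20 * l / 64 + 0.1 * i) for l in range(64)] for i in range(4)]
    spectra = range_fft(frame, z=64)
    print([max(range(64), key=lambda n: abs(s[n])) for s in spectra])
```

Zero-padding from 64 to 128 points halves the bin spacing, so the tone that sits in bin 20 without padding appears in bin 40. Padding interpolates the spectrum but does not improve the true range resolution, which is set by the chirp bandwidth.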

Figure 6 Processing for range, elevation and azimuth followed by two signal processing paths for detection, clustering and classification of 3D point cloud data.

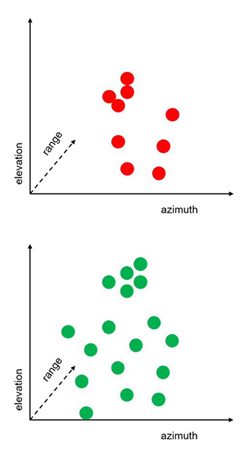

Figure 7 Point cloud spread of a sensed child (a) and an adult (b) in a vehicle.

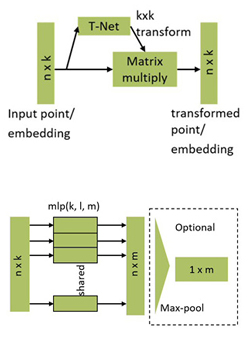

Figure 8 T-Net feature transformation in PointNet (a) and vanilla PointNet (b).

After the fast-time FFT has been computed for all chirps, slow-time filtering removes static targets and targets with Doppler frequencies above 5 Hz, so that the vital sign signal of human targets is retained. The $N_{Tx} \times N_{Rx}$ range spectra are arranged into a matrix along elevation φ and azimuth θ according to the positions of the virtual antennas. The effective array factor $\mathbf{w}(\theta,\phi) = \mathbf{a}_{Tx}(\theta,\phi) \otimes \mathbf{a}_{Rx}(\theta,\phi)$ is the Kronecker product of the Tx array steering vector and the Rx array steering vector. It is then used to calculate the target angles $\{\theta_t, \phi_t\}_{t=1}^{T}$ for all range bins. The angle profiles along θ and φ for each range bin are generated with either a Capon beamformer or maximum likelihood estimation.11
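The Kronecker-product steering vector and the Capon beamformer can be illustrated for a single range bin. This is a minimal sketch, and every geometric parameter in it is a hypothetical assumption. It uses two Tx elements spaced half a wavelength in elevation and three Rx elements spaced half a wavelength in azimuth. Positions are given in wavelengths as (x, z) pairs, and there is a single target. The diagonal loading value and the 1 degree search grid are also assumptions. The Capon spectrum is P(θ, φ) = 1 / (w^H R^-1 w), where R is the sample covariance of the virtual-array snapshots across chirps. The maximum likelihood alternative is not shown.

```python
import cmath
import math


def steering(positions, theta, phi):
    """Array response for element positions (x, z) in wavelengths; x is azimuth, z is elevation."""
    ux = math.sin(theta) * math.cos(phi)
    uz = math.sin(phi)
    return [cmath.exp(2j * math.pi * (x * ux + z * uz)) for x, z in positions]


def kron(a, b):
    """Kronecker product of two vectors: one virtual element per (Tx, Rx) pair."""
    return [am * bn for am in a for bn in b]


def invert(matrix):
    """Gauss-Jordan inversion of a small complex matrix with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def covariance(snapshots, loading):
    """Sample covariance R = mean(x x^H) over chirps, plus diagonal loading."""
    n, m = len(snapshots[0]), len(snapshots)
    return [[sum(s[i] * s[j].conjugate() for s in snapshots) / m + (loading if i == j else 0.0)
             for j in range(n)] for i in range(n)]


def capon_spectrum(r_inv, w):
    """Capon (MVDR) power 1 / (w^H R^-1 w) for one steering vector w."""
    quad = sum(w[i].conjugate() * r_inv[i][j] * w[j] for i in range(len(w)) for j in range(len(w)))
    return 1.0 / quad.real


def estimate_angles(snapshots, tx, rx, loading=1e-2):
    """Grid search in whole degrees for the (azimuth, elevation) maximising the Capon spectrum."""
    r_inv = invert(covariance(snapshots, loading))
    best = None
    for th_deg in range(-60, 61):
        for ph_deg in range(-30, 31):
            th, ph = math.radians(th_deg), math.radians(ph_deg)
            p = capon_spectrum(r_inv, kron(steering(tx, th, ph), steering(rx, th, ph)))
            if best is None or p > best[0]:
                best = (p, th_deg, ph_deg)
    return best[1], best[2]


if __name__ == "__main__":
    TX = [(0.0, 0.0), (0.0, 0.5)]              # hypothetical Tx layout (elevation axis)
    RX = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]  # hypothetical Rx layout (azimuth axis)
    th0, ph0 = math.radians(20), math.radians(10)
    a0 = kron(steering(TX, th0, ph0), steering(RX, th0, ph0))
    snaps = [[cmath.exp(0.7j * m) * v for v in a0] for m in range(16)]  # 16 chirps, one target
    print(estimate_angles(snaps, TX, RX))
```

Because each virtual element's phase depends on the sum of its Tx and Rx positions, the Kronecker product yields a 2 × 3 virtual planar array. That array is what makes a joint azimuth and elevation search possible.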

After 3D radar point cloud generation, either of two processing approaches can be employed. The first applies 3D ordered-statistic constant false alarm rate (3D OS-CFAR) detection to a 3D radar data voxel. It follows this with 3D density-based spatial clustering of applications with noise (DBSCAN), which groups the detected points into individual human targets. Alternatively, the problem can be addressed with a deep learning approach. In this approach, Doppler velocity and radar cross section (RCS) values are fed into PointNet, a neural network architecture that can perform 3D object detection and instance segmentation. Compared with the signal processing approach, PointNet12 enables not only 3D bounding box estimation but also classification of each detected and clustered target as an adult or a child. Figure 7 shows how the point clouds of a child and an adult differ, a distinction that must be made for child-left-behind sensing and smart airbag deployment.
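The signal processing path (OS-CFAR detection followed by DBSCAN clustering) can be sketched as follows. For brevity, the OS-CFAR here is a 1D simplification of the 3D voxel detector described above. Its reference and guard window sizes, the order statistic `k` and the scaling factor `alpha` are all illustrative assumptions. The DBSCAN is a plain textbook version operating on 3D points, with `eps` and `min_pts` likewise assumed.

```python
import math


def os_cfar_1d(power, n_ref=8, n_guard=2, k=12, alpha=4.0):
    """Indices whose power exceeds alpha times the k-th smallest reference cell."""
    hits = []
    n = len(power)
    for i in range(n):
        # reference cells on both sides of the cell under test, skipping the guard cells
        refs = [power[j] for j in range(i - n_guard - n_ref, i + n_guard + n_ref + 1)
                if 0 <= j < n and abs(j - i) > n_guard]
        if len(refs) < k:
            continue  # not enough reference cells near the edges
        if power[i] > alpha * sorted(refs)[k - 1]:
            hits.append(i)
    return hits


def dbscan(points, eps, min_pts):
    """Cluster labels per point: 0, 1, ... for clusters and -1 for noise."""
    labels = [None] * len(points)  # None means not yet visited

    def neighbours(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        nbrs = neighbours(i)
        if len(nbrs) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        labels[i] = cluster
        queue = [j for j in nbrs if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_nbrs = neighbours(j)
            if len(j_nbrs) >= min_pts:  # core point: keep growing the cluster
                queue.extend(j_nbrs)
        cluster += 1
    return labels
```

For example, `dbscan(detections, eps=0.2, min_pts=3)` would group hypothetical 3D detections (in metres) into one label per occupant. Isolated spurious points receive the label -1. Ordered statistics make the CFAR threshold less sensitive to a strong neighbouring target than cell averaging would be.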

Given radar point clouds from the 2D Capon algorithm, the objective of the 3D deep neural network is to classify and segment objects in 3D space. The radar point cloud is represented as a set of three-dimensional points:

$$\mathcal{P} = \{\mathbf{p}_k \mid k = 1, \ldots, K\}, \quad \mathbf{p}_k \in \mathbb{R}^3$$

where K is the number of detected target points.

For the classification task, the objective is to distinguish among the classes child, adult, luggage and empty. Rotating the point cloud should not alter the classification or segmentation results. To achieve this invariance under geometric transformations, a transformation network (T-Net) maps the input feature vectors to transformed feature vectors. Figure 8a illustrates this operation. For n input points of dimension k, the T-Net learns a k × k transformation matrix. Multiplying the n × k input feature matrix by this matrix produces an n × k transformed feature matrix. The vanilla PointNet then approximates a general set function that is continuous with respect to the Hausdorff distance. It does so with a symmetric function built from a multi-layer perceptron (MLP), applied to each point, followed by a max pooling operation (see Figure 8b). A series combination of T-Nets and vanilla PointNets is required to implement the DeepNet that can detect, classify and segment 3D point clouds for child sensing.
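The T-Net plus vanilla PointNet structure can be illustrated with a tiny forward pass. This is a minimal, untrained sketch. The weights are random placeholders, and the layer sizes and the `random_params` helper are hypothetical. The code only shows the data flow: a mini-network regresses a k × k matrix (initialised around the identity), the n × k points are multiplied by it, a shared per-point layer is applied, and channel-wise max pooling produces the global feature that feeds four class scores. Because max pooling is symmetric, shuffling the input points leaves the output unchanged.

```python
import random


def dense(weights, bias, v, relu=True):
    """Fully connected layer; weights holds one row per output unit."""
    out = [sum(w * a for w, a in zip(row, v)) + b for row, b in zip(weights, bias)]
    return [max(0.0, o) for o in out] if relu else out


def shared_mlp(points, weights, bias):
    """Apply the same layer to every point independently."""
    return [dense(weights, bias, p) for p in points]


def max_pool(features):
    """Channel-wise max over points: a symmetric, order-independent aggregation."""
    return [max(channel) for channel in zip(*features)]


def t_net(points, k, p):
    """Mini-PointNet that regresses a k x k transform, offset from the identity."""
    g = max_pool(shared_mlp(points, p["t_w"], p["t_b"]))
    flat = dense(p["t_fc"], [0.0] * (k * k), g, relu=False)
    return [[(1.0 if r == c else 0.0) + flat[r * k + c] for c in range(k)] for r in range(k)]


def transform(points, m):
    """(n x k) points times (k x k) matrix gives (n x k) transformed points."""
    k = len(m)
    return [[sum(pt[i] * m[i][j] for i in range(k)) for j in range(k)] for pt in points]


def class_scores(points, p):
    """T-Net, shared MLP, max pool, then linear scores for child/adult/luggage/empty."""
    aligned = transform(points, t_net(points, 3, p))
    global_feature = max_pool(shared_mlp(aligned, p["w"], p["b"]))
    return dense(p["head_w"], p["head_b"], global_feature, relu=False)


def random_params(seed=0, k=3, hidden=16, n_classes=4):
    """Hypothetical untrained weights, only for exercising the data flow."""
    rng = random.Random(seed)

    def mat(rows, cols, scale=1.0):
        return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

    return {
        "t_w": mat(8, k), "t_b": [0.0] * 8, "t_fc": mat(k * k, 8, 0.01),
        "w": mat(hidden, k), "b": [0.0] * hidden,
        "head_w": mat(n_classes, hidden), "head_b": [0.0] * n_classes,
    }
```

In a real network, every weight (including the T-Net's) is learned end to end, and the layers are much wider. The sketch preserves only the invariance argument and the tensor shapes.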

The transformation at the input makes the input points invariant to geometric transformations, while a second transformation at the intermediate layers does the same for the embedded feature vectors. The network architecture contains both a classification network and a segmentation network. The segmentation network labels each point with the class it belongs to. Its input combines local (per-point) and global features, which a sequence of vanilla PointNets then processes.
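How the segmentation network combines local and global features can be shown in isolation. This is a minimal sketch under stated assumptions. Each point's local feature is concatenated with the global, max-pooled feature, and a single shared linear layer then assigns one label per point. In the actual network, several MLP layers follow the concatenation. The class names and weights below are hypothetical.

```python
def segmentation_features(local_features):
    """Concatenate each point's local feature with the global (max-pooled) feature."""
    global_feature = [max(channel) for channel in zip(*local_features)]
    return [list(f) + global_feature for f in local_features]


def label_points(local_features, weights, bias, classes):
    """Score every concatenated point feature with one shared linear layer; take the argmax."""
    labels = []
    for f in segmentation_features(local_features):
        scores = [sum(w * a for w, a in zip(row, f)) + b for row, b in zip(weights, bias)]
        labels.append(classes[scores.index(max(scores))])
    return labels


if __name__ == "__main__":
    local = [[1.0, 0.0], [0.0, 2.0], [0.2, 0.6]]  # hypothetical 2-channel local features
    weights = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
    print(label_points(local, weights, [0.0, 0.0], ("occupant", "background")))
```

Appending the global feature gives every per-point decision some context about the whole cloud. The local part keeps the decision specific to the point being labelled.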

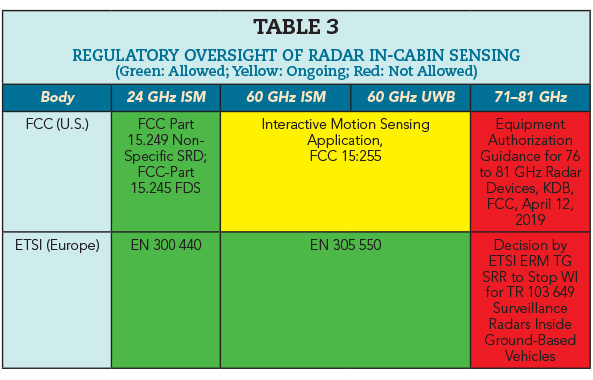

FREQUENCY REGULATIONS FOR IN-CABIN RADAR SYSTEMS IN USA AND EUROPE

Because in-cabin radar applications are new, the automotive community is still actively debating which frequency spectrum is best suited. Table 3 is an excerpt of ongoing regulatory activities, mostly driven by system platform providers. These activities are subject to change as regulatory committees reach new decisions.

CONCLUSION

In-cabin sensing is an emerging market that is expected to receive a boost from regulations and legislation worldwide. Radar is seen as a promising technology for passive safety applications such as left-behind child detection and occupancy sensing, and for applications beyond them. Novel signal processing and deep learning techniques are expected to make these applications more robust. They must also balance computational cost, the degree of information each use case needs and system power consumption. In the future, a multi-sensor fusion approach is expected to enable more robust systems by offering sensor redundancy.

Acknowledgment

Portions of the algorithm sections and figures are taken with permission from “Emerging Deep Learning Applications of Short-Range Radars,” published by Artech House. Web: https://us.artechhouse.com/Emerging-Deep-Learning-Applications-of-Short-Range-Radars-P2145.aspx.

References

1. “Global Status Report on Road Safety,” World Health Organization, 2018, Web: https://www.who.int/violence_injury_prevention/road_safety_status/2018/GSRRS2018_Summary_EN.pdf (Date Accessed: 20 April 2020).

2. “Child Vehicular Heatstroke Deaths per Year,” KidsAndCars.org, Web: https://www.kidsandcars.org/how-kids-get-hurt/heat-stroke/ (Date Accessed: 20 April 2020).

3. “Leading Automaker’s Commitment to Implement Rear Seat Reminder Systems,” Alliance of Automobile Manufacturers, September 2019, Web: https://autoalliance.org/wp-content/uploads/2019/09/Rear_Seat_Reminder_System_Voluntary_Agreement_September_4_2019-1.pdf (Date Accessed: 23 April 2020).

4. “Regulation No 16 of the Economic Commission for Europe of the United Nations (UN/ECE),” Web: https://op.europa.eu/en/publication-detail/-/publication/d13b01c3-4962-478b-a710-215ee6dae2cb/language-en (Date Accessed: 19 April 2020).

5. K. Diederichs, A. Qiu and G. Shaker, “Wireless Biometric Individual Identification Utilizing Millimeter Waves,” IEEE Sensors Letters, Vol. 1, No. 1, February 2017.

6. C. Li, Z. Peng, T. Y. Huang, T. Fan, F. K. Wang, T. S. Horng, J. M. Munoz-Ferreras, R. Gomez-Garcia, L. Ran and J. Lin, “A Review on Recent Progress of Portable Short-Range Noncontact Microwave Radar Systems,” IEEE Transactions on Microwave Theory and Techniques, Vol. 65, No. 5, May 2017, pp. 1692–1706.

7. M. Alizadeh, G. Shaker, J. C. M. De Almeida, P. P. Morita and S. Safavi-Naeini, “Remote Monitoring of Human Vital Signs Using mm-Wave FMCW Radar,” IEEE Access, Vol. 7, April 2019, pp. 54958–54968.

8. A. Singh, X. Gao, E. Yavari, M. Zakrzewski, X. H. Cao, V. M. Lubecke and O. Boric-Lubecke, “Data-Based Quadrature Imbalance Compensation for a CW Doppler Radar System,” IEEE Transactions on Microwave Theory and Techniques, Vol. 61, No. 4, April 2013, pp. 1718–1724.

9. M. Arsalan, A. Santra and C. Will, “Improved Contactless Heartbeat Estimation in FMCW Radar via Kalman Filter Tracking,” IEEE Sensors Letters, March 2020.

10. A. Santra, R. V. Ulaganathan and T. Finke, “Short-Range Millimetric-Wave Radar System for Occupancy Sensing Application,” IEEE Sensors Letters, Vol. 2, No. 3, September 2018.

11. A. Santra, I. Nasr and J. Kim, “Reinventing Radar: The Power of 4D Sensing,” Microwave Journal, Vol. 61, No. 12, December 2018, pp. 26–38.

12. C. R. Qi, H. Su, K. Mo and L. J. Guibas, “PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, July 2017.